SEO testing expert Emily Potter joins us once again to wrap up this season of Whiteboard Friday! Today, she takes you through a few tests that generated unexpected results for her team at SearchPilot, and what those results mean for SEO strategy.

Enjoy, and stay tuned for the next season of Whiteboard Friday episodes, expected later this summer!

Video Transcription

Howdy, Moz fans. I’m Emily Potter. I’m Head of Customer Success at SearchPilot. If you haven’t heard of us before, we’re an SEO A/B testing platform. We run large-scale SEO tests on enterprise websites.

So that’s websites in industries like travel, e-commerce, or listing websites, anything that has lots of traffic and lots of templated pages. Today I’m here to share with you five of the most surprising results from tests we’ve run at SearchPilot. Part of a successful SEO testing program is getting used to being surprised a heck of a lot, whether that’s because something you really thought was going to work ends up not working, an SEO best practice ends up actually hurting your organic traffic, or something that you tested just because you could ends up being a winner.

All of our customers, and we as well, get surprised all the time at SearchPilot, but that’s what makes testing so important. If you’re a large enterprise website, then testing is what gives you a competitive edge. It helps you find those things that your competitors maybe wouldn’t, especially if they’re not testing, and it helps you stop yourself from rolling out changes that would harm your organic traffic, changes you might have rolled out had you not been able to test them.

Or sometimes it’s as simple as giving you a business case to get the backing that you need to roll out something on your website that you were going to do anyway but maybe didn’t have the buy-in for from other stakeholders. If you want to learn more about how we run tests at SearchPilot and how we control for things like seasonality, algorithm updates, and all that, go to our website, where there are lots of resources.

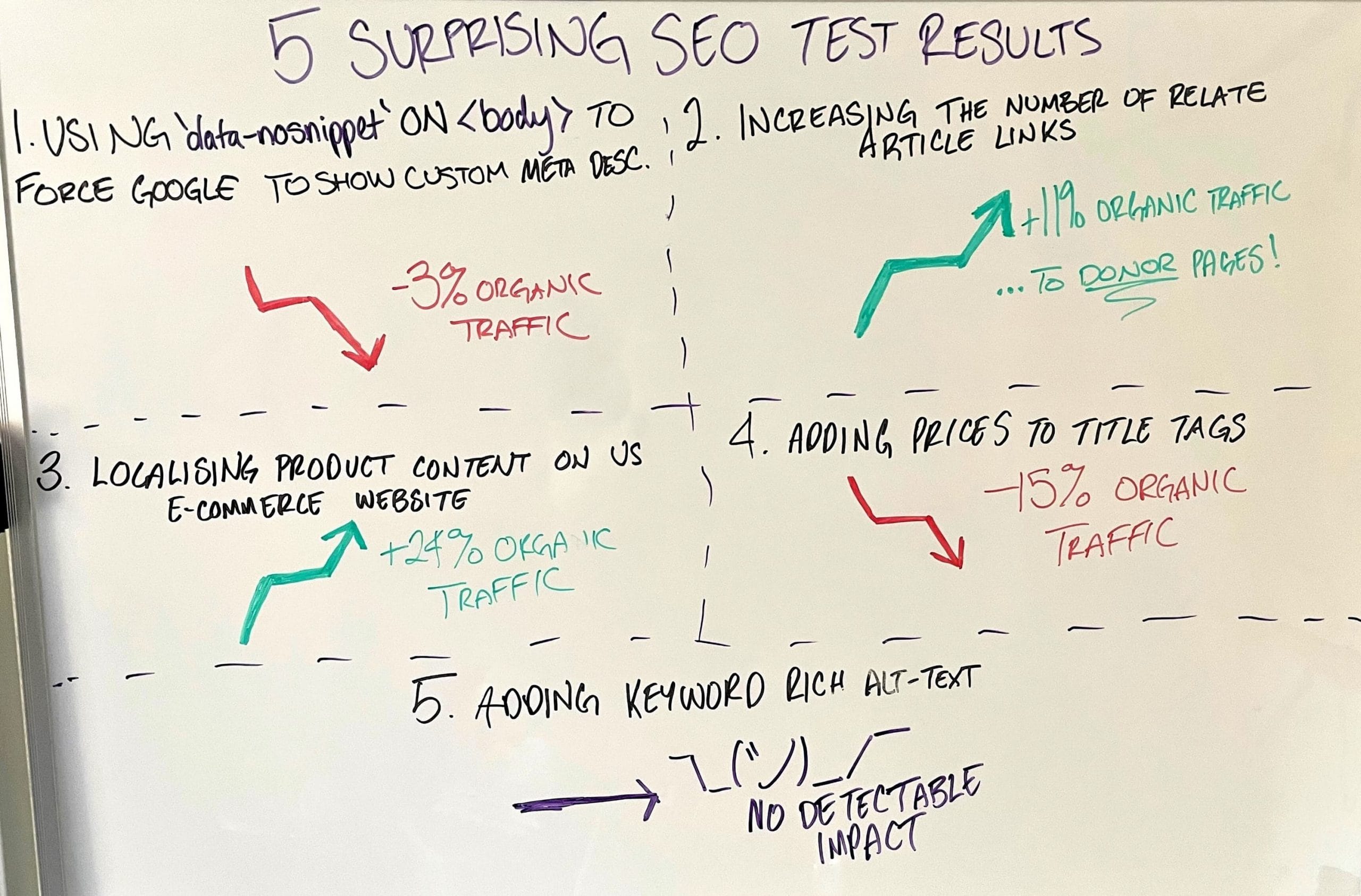

1. Using ‘data-nosnippet’ to force Google to show custom meta descriptions

Okay, the first test I’m going to share with you today is a customer that used the data-nosnippet attribute to force Google to respect its meta descriptions. As you probably know, Google now overwrites meta descriptions as well as title tags, and this can be really frustrating. In the case of meta descriptions, sometimes it brings in text that’s strung together with ellipses, it’s not very readable, it doesn’t have good grammar, and a lot of SEOs find this frustrating.

So to get Google to show our meta descriptions instead, our customer added the data-nosnippet attribute to the body tag. What the data-nosnippet attribute does is it tells robots, like Googlebot, I don’t want you to use any of this content in the search snippet. So by putting it on the body tag, we effectively forced Google to use what was in the head, i.e., the meta description.
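As a rough illustration of the change described here, the Python sketch below adds `data-nosnippet` to the `<body>` tag of an HTML string. The function name `add_nosnippet_to_body` and the sample page are hypothetical, and this is not how SearchPilot applied the change. Note also that Google's documentation describes the attribute for `span`, `div`, and `section` elements; placing it on `body` is simply what this test did.

```python
import re

def add_nosnippet_to_body(html: str) -> str:
    """Add a data-nosnippet attribute to the first <body> tag if it is missing."""
    def _patch(match):
        attrs = match.group(1)
        if "data-nosnippet" in attrs:
            return match.group(0)  # already present, leave the tag untouched
        return f"<body{attrs} data-nosnippet>"

    # Match "<body" plus any existing attributes, up to the closing ">".
    return re.sub(r"<body\b([^>]*)>", _patch, html, count=1, flags=re.IGNORECASE)

# Hypothetical page used only for illustration.
page = (
    '<html><head><meta name="description" content="Hand-written description.">'
    '</head><body class="product"><p>Page copy</p></body></html>'
)
patched = add_nosnippet_to_body(page)
```

Running the function a second time on `patched` leaves it unchanged, so it is safe to apply to templates that may already carry the attribute.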

As you can see, this was negative. It led to a 3% loss in organic traffic. As far as SEO tests go, that’s actually a pretty small loss, but that’s still not something you want to deploy. Why would you lose any traffic at all if you know something is going to hurt? So in this case, it turns out Google maybe is actually better at writing meta descriptions than we are.

So maybe meta descriptions aren’t a thing we should be spending so much time on as SEOs. At SearchPilot, we’re finding it’s very hard to come up with a meta description test that’s positive. We’ve run this a couple of times on different industries and different websites, and oftentimes Google is actually better at writing them than we are anyway.

So maybe let’s just let the robots do the work.

2. Increasing the number of related article links

Our second test was on an e-commerce website. This was on the blog portion of their website, where they had blog content related to their products. At the bottom of every article, there were two related article links. In this test, we increased that from two to four.

Now running internal linking experiments is complicated because we’re impacting both the pages where we’re adding the links and we’re impacting the pages that receive the links. So we have to make sure that we’re controlling for both. Again, if you want to learn more about how we do that, you can check out our website or follow up with me after. Now, in this case, this was an 11% increase in organic traffic, which maybe doesn’t seem surprising because it’s links, we know that they work.

Why do I have this included in five surprising test results then? I have this included because actually this was to the donor pages. By that I mean the pages where we added the links. On the pages that were receiving the links, we actually didn’t see any detectable impact on organic traffic. That was really surprising, and it goes to show that links do more than just pass on link equity.

They actually help robots understand your page better. They can be a way to associate different bits of content together. So they actually might have benefit to both pages. This is also why it’s so important to make a controlled experiment if you’re doing internal linking tests. If we were just measuring the impact on the pages that were receiving the links, we wouldn’t have found this one at all.

And sometimes at SearchPilot we’ve actually seen this be positive for one group and negative for another. So it’s really important to find out the net impact.
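To make "net impact" concrete, here is a minimal Python sketch that combines the effect on the donor group and the receiving group, weighting each by its baseline traffic. The session counts are invented for illustration, and this simple weighting is an assumption, not SearchPilot's actual methodology.

```python
def net_impact(groups):
    """Combine per-group results into one net figure.

    groups: list of (baseline_sessions, relative_change) pairs,
            e.g. (10_000, 0.11) means 10,000 sessions and an 11% uplift.
    Returns (absolute_change_in_sessions, relative_change_overall).
    """
    baseline_total = sum(baseline for baseline, _ in groups)
    absolute_change = sum(baseline * change for baseline, change in groups)
    return absolute_change, absolute_change / baseline_total

# Hypothetical numbers: donor pages gain 11%, receiving pages show no change.
donor_only = net_impact([(10_000, 0.11), (20_000, 0.0)])

# Hypothetical numbers: positive for one group, negative for the other.
mixed = net_impact([(10_000, 0.05), (20_000, -0.04)])
```

In the second case the smaller group improves, but the larger group's decline outweighs it, so the net result is negative. That is the situation you would miss by measuring only one side.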

3. Localizing product content on a U.S. e-commerce website

Our third test that I’m going to share with you today is when we localized content on product pages for an e-commerce website in the U.S.

So that was changing things like trousers to pants. This was a website that was originally based in the UK. They rolled out in the U.S. market, and they just kept the UK content when they did that. So we wanted to figure out what would happen if we updated that and made it actually fit the market that we were in. This was a 24% increase in organic traffic.

Now, to me, what was surprising was the magnitude of the difference that made. But I suppose that isn’t surprising if you think about it. If trousers doesn’t get very many searches per month in the U.S. but pants does, then I guess you would expect localizing that content to improve your organic traffic.

The places where this content existed were things like the meta title, the meta description, the H1, and so on. If nothing else, this is just a nice indication that sometimes normal SEO recommendations actually work. It was also a great example of a test that let the customer make a business case to get their devs to implement a change they might not otherwise have been able to convince them was important.
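A minimal Python sketch of this kind of localization is shown below. Only the trousers-to-pants swap comes from the talk; the `localize` helper, the `page_fields` dictionary, and the sample copy are hypothetical.

```python
import re

# Only "trousers" -> "pants" comes from the talk; extend with your own terms.
UK_TO_US = {"trousers": "pants"}

def localize(text, mapping=UK_TO_US):
    """Swap whole-word UK terms for US terms, keeping a leading capital letter."""
    pattern = re.compile(
        r"\b(" + "|".join(re.escape(term) for term in mapping) + r")\b",
        re.IGNORECASE,
    )

    def _swap(match):
        word = match.group(0)
        replacement = mapping[word.lower()]
        return replacement.capitalize() if word[0].isupper() else replacement

    return pattern.sub(_swap, text)

# Hypothetical product page fields, matching the places mentioned above.
page_fields = {
    "title": "Men's Trousers | Example Store",
    "meta_description": "Shop our range of trousers for every occasion.",
    "h1": "Men's Trousers",
}
localized = {field: localize(value) for field, value in page_fields.items()}
```

Whole-word matching avoids rewriting substrings inside unrelated words. A real rollout would likely need a reviewed term list rather than blind replacement.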

4. Adding prices to title tags

Test number four, adding prices to the titles. Again, an e-commerce website. You would think it’s a best practice recommendation to have the price in the title. That’s something users want to see. But, as you can see here, this was actually negative, and it was a 15% drop in organic traffic, so pretty substantial.

Important context here though. One of our hypotheses was our competitors in the SERP weren’t using prices in the title tag but instead had price snippets that were coming from structured markup. So maybe users just didn’t respond well to seeing something different to what other competitors had in the SERP.
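To show the difference between the two approaches, the Python sketch below builds a title tag with the price in it and, separately, a schema.org `Product` / `Offer` JSON-LD block, the kind of structured markup that price snippets can come from. The function names, product name, and price are hypothetical, and whether a search engine shows a price snippet is up to the search engine.

```python
import json

def title_with_price(name, price, symbol="$"):
    """Variant tested in the talk: put the price directly in the title tag text."""
    return f"{name} | {symbol}{price:.2f}"

def product_jsonld(name, price, currency):
    """Alternative: expose the price through schema.org Product/Offer markup."""
    data = {
        "@context": "https://schema.org",
        "@type": "Product",
        "name": name,
        "offers": {
            "@type": "Offer",
            "price": f"{price:.2f}",
            "priceCurrency": currency,
        },
    }
    return '<script type="application/ld+json">' + json.dumps(data) + "</script>"

# Hypothetical product used only for illustration.
title = title_with_price("Example Pants", 49.99)
snippet = product_jsonld("Example Pants", 49.99, "USD")
```

Keeping the two variants as separate functions makes it easy to test each one independently rather than assuming either is the right choice for a given site.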

It’s also possible that our prices weren’t as competitive, and putting them front and center in our title tag didn’t help us because it made it clear that some of our competitors in the search results had better prices. In any case, we didn’t deploy this change. But this is an important lesson that no two websites are the same.

We’ve run this test a lot of times at SearchPilot, and we’ve seen positive, we’ve seen negative, and we’ve seen inconclusive results with this. So there is no one-size-fits-all approach with SEO, and there’s nothing that’s an absolute truth, even with something as simple as adding prices to your title tags.

5. Adding keyword-rich alt text

The final test I’m going to share with you today was when we added keyword-rich alt text to images on the product page. As you can see, this had no detectable impact, even though this is a common SEO recommendation. This is a common thing that comes up in things like tech audits or big deliverables that you give to a potential new customer.

Here, we found it actually didn’t have much of an impact. That suggests that alt text doesn’t have much impact on rankings. However, there are other really important reasons we would implement alt text, and we decided to deploy this anyway. Number one being accessibility.

Alt text helps your images become more accessible for those that maybe can’t see them, and it helps bots understand what’s on the page. Or if something is just not rendering, then it helps people still know what they’re looking at. So alt text, although maybe not a big winner for SEO traffic, is still an important implementation and not something we want to forget about.
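Since the case for alt text here is accessibility, a simple audit can be more useful than keyword tuning. The Python sketch below uses the standard library's `html.parser` to list images with missing or empty alt text. The class name, function name, and sample markup are hypothetical.

```python
from html.parser import HTMLParser

class MissingAltFinder(HTMLParser):
    """Collect the src of every <img> whose alt attribute is missing or empty."""

    def __init__(self):
        super().__init__()
        self.missing = []

    def handle_starttag(self, tag, attrs):
        if tag != "img":
            return
        attr_map = dict(attrs)
        # Treat an absent, valueless, or whitespace-only alt as missing.
        if not (attr_map.get("alt") or "").strip():
            self.missing.append(attr_map.get("src"))

def images_missing_alt(html):
    finder = MissingAltFinder()
    finder.feed(html)
    return finder.missing

# Hypothetical product page fragment.
sample = (
    '<img src="a.jpg" alt="Blue cotton pants">'
    '<img src="b.jpg">'
    '<img src="c.jpg" alt=""/>'
)
flagged = images_missing_alt(sample)
```

An empty `alt` is legitimate for purely decorative images, so treat the output as a list to review rather than a list of errors.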

That’s all that I have to share with you today. If you thought this was interesting and you want to get more case studies like these, you can sign up to our case study email list. Every two weeks we send out an email that includes a different case study. You can also find all the ones that we’ve done in the past on our website. So even if you can’t run SEO tests or you’re not a large enterprise website, you can still use the learnings that we have to help you make some business cases at your company.

Thanks for having me. Bye, Moz.